Overcoming Challenges and Enhancing Stability for a Global Hospitality Platform

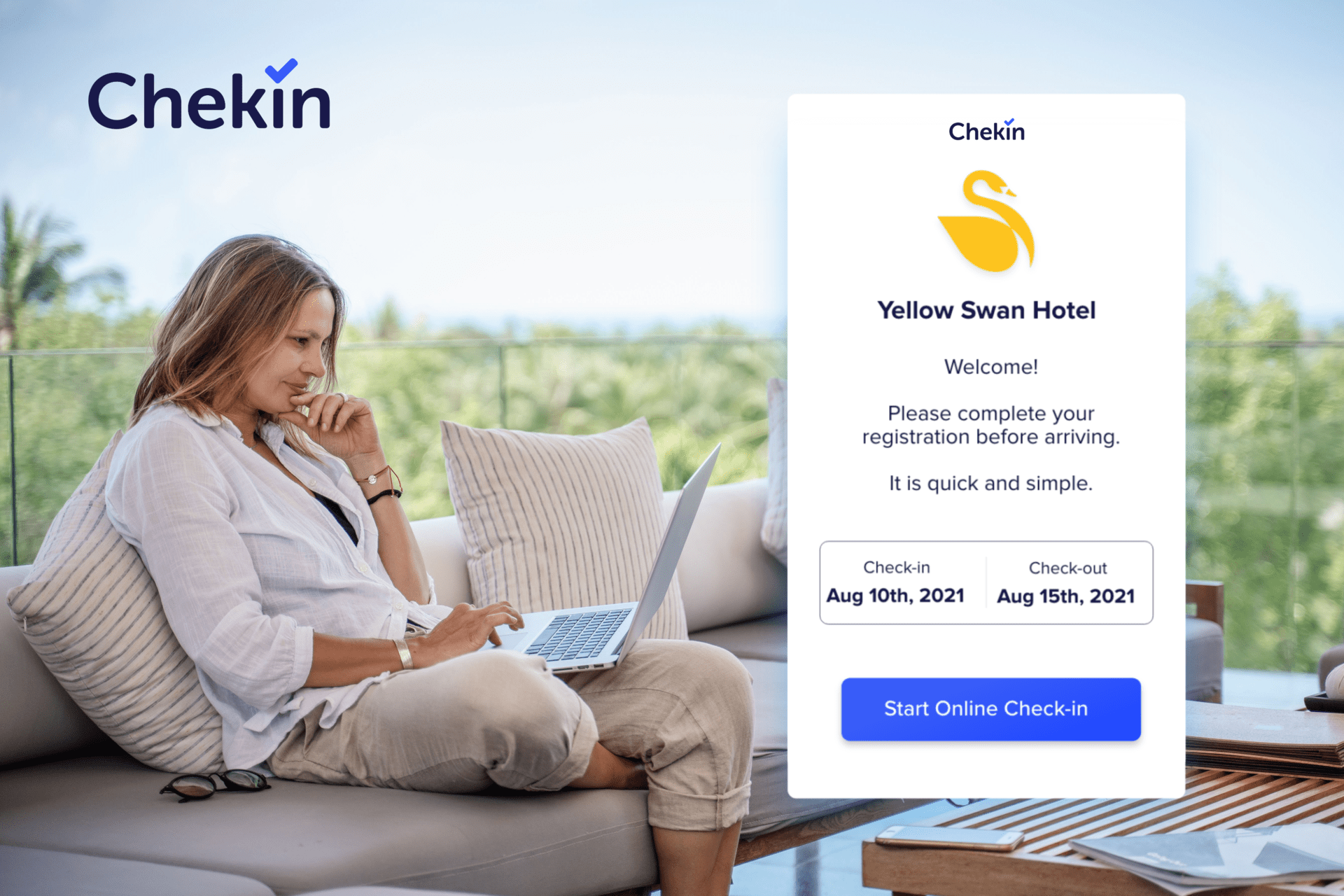

Chekin is a guest check-in platform that gives the tourism industry a frictionless check-in process. By integrating with various partners, it saves time and money. The system automates check-in and check-out across different reservation systems for lodging that ranges from hotels to campgrounds.

The Challenge

In our initial contact with Chekin, we encountered issues that needed urgent attention. The platform was unstable, multiple services were failing, and the complexity of the existing architecture, combined with minimal monitoring, made it difficult to pinpoint errors. This led to delays in resolving incidents. Previous implementations had not kept the repositories in a valid state, so the state of each resource had to be reverse engineered before it could be versioned.

- Standardize the working methodology.

- Understand and document the architecture.

- Segment accounts and resources to provide security while enabling developers to self-provision necessary resources.

- Eliminate manual and repetitive processes.

Issues

- A large number of similar applications spread across different repositories with inadequate documentation.

- Poor practices in service segmentation, with over 100 services in a single namespace, leaving resources fully exposed to the internet.

- Manual and non-versioned manifest processes.

- Pipelines existed to automate the build/deploy process, but often the state defined in the repository didn’t match the actual implementation.

- All in-use images were tagged as “latest,” requiring comparisons across multiple resources and searches in various repositories/registries to identify the exact version implemented.

- Kubernetes was used as the orchestrator, but scaling was handled by a script, not utilizing the benefits of native HPA. Custom metric-based scaling was also implemented.

- The asynchronous architecture relied on a RabbitMQ deployed within the cluster, causing critical service failures. Backups and scalability were lacking.

Objectives

- Implement automated CI/CD workflows to reduce repetitive work and provide traceability for changes.

- Document all infrastructure, services, and deployment processes for new services.

- Implement a monitoring system to facilitate issue evaluation.
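One of the issues listed above is that every in-use image was tagged “latest,” which made it impossible to tell from a manifest which version was running. As an illustration only, and not the tooling used in this project, the following minimal Python sketch shows how a pipeline step could flag image references that are not pinned to an explicit tag or digest. The function name `is_pinned` and the sample image names are hypothetical.

```python
def is_pinned(image: str) -> bool:
    """Return True if the image reference names an explicit, non-"latest" version.

    Hypothetical helper for illustration; it handles the common
    [registry[:port]/]name[:tag][@digest] shapes only.
    """
    # A digest (name@sha256:...) identifies one exact image.
    if "@" in image:
        return True
    # Only look at the last path segment, so a registry port such as
    # "registry:5000/app" is not mistaken for a tag.
    last_segment = image.rsplit("/", 1)[-1]
    if ":" not in last_segment:
        # No tag at all means the runtime falls back to "latest".
        return False
    tag = last_segment.rsplit(":", 1)[1]
    return tag != "latest"


def unpinned(images: list[str]) -> list[str]:
    """Return the image references that should be rejected by the check."""
    return [image for image in images if not is_pinned(image)]


if __name__ == "__main__":
    # Sample references are made up for the example.
    sample = ["api:latest", "registry:5000/worker", "registry:5000/worker:1.4.2"]
    for image in unpinned(sample):
        print(f"not pinned: {image}")
```

A check like this could run against rendered manifests before deployment, so that the version in the repository is always the version in the cluster.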

The Solution

Since the client wasn’t fully aware of all their services or the architecture built over the years by various team members, a decision was made to reconstruct the infrastructure and migrate it to a known and pre-documented setup.

This involved rebuilding the networking foundations (VPCs, subnets, route tables) and deploying new clusters using infrastructure as code, along with dependencies like relational and non-relational databases, queuing services, etc.

CI/CD was migrated to GitHub Actions, and service deployment was managed using Helm to simplify and standardize manifests. For the cluster migration, an API Gateway was placed in front of both environments, so APIs could be moved to the new infrastructure gradually and rolled back quickly to the previous infrastructure if needed.
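The gradual migration described above can be pictured as a routing table that sends already-migrated API paths to the new infrastructure and everything else to the old one. The sketch below is a simplified model of that idea in Python, not the actual gateway configuration; the class `MigrationRouter`, the backend labels, and the `/bookings` path are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical backend labels; a real gateway would hold upstream URLs.
LEGACY = "legacy"
NEW = "new"


@dataclass
class MigrationRouter:
    """Tracks which API path prefixes have been moved to the new backend."""

    migrated_prefixes: set[str] = field(default_factory=set)

    def migrate(self, prefix: str) -> None:
        """Start sending traffic for this prefix to the new backend."""
        self.migrated_prefixes.add(prefix.rstrip("/"))

    def rollback(self, prefix: str) -> None:
        """Send traffic for this prefix back to the legacy backend."""
        self.migrated_prefixes.discard(prefix.rstrip("/"))

    def backend_for(self, path: str) -> str:
        """Pick a backend; anything not explicitly migrated stays on legacy."""
        for prefix in self.migrated_prefixes:
            # Match the prefix itself or a sub-path, but not "/bookingsX".
            if path == prefix or path.startswith(prefix + "/"):
                return NEW
        return LEGACY


if __name__ == "__main__":
    router = MigrationRouter()
    router.migrate("/bookings")
    print(router.backend_for("/bookings/42"))
    router.rollback("/bookings")
    print(router.backend_for("/bookings/42"))
```

Because the default is always the legacy backend, rolling back one API is a single change to the routing table and does not touch the services themselves.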

A fully customized stack was implemented for logging, monitoring, and alerts, including automated notifications for both infrastructure and business metrics.

The Results

- Deployment processes for all applications were automated.

- Developer tasks were streamlined, making the creation of new resources much easier.

- Incident frequency decreased from daily occurrences across multiple services to sporadic issues in specific services. This allowed progress in documenting non-standard services.

- Incident detection time was reduced due to the implementation of proper monitoring.

- Significant time savings were achieved in incident resolution.

- Bottlenecks were eliminated, networks were segmented, permissions were managed, and backups were implemented for critical services with persistent data.